Updated: January 8, 2025

Added a new code!

Plenty of Anime Genesis codes have been available since launch, giving all players a large number of gems and reroll tokens! This new Roblox tower defense game takes place in the multiverse, where players can unlock secret characters and build their defense teams.

All Anime Genesis Codes

Anime Genesis Codes (Working)

- UPD1.5: 2000 Gems (New)

- CursedGenesis: 15 Cursed Stones (New)

- HappyNewYear2025: 1000 Gems, 1500 Coins, and 5 Reroll Tokens

- Favorite1.5K: 500 Gems and 3 Reroll Tokens

- MerryChristmas2024: 10 Ice Capsules

- SorryForBug2: Rewards (Level 3+ required)

- Visit400K: 1000 Gems and 5 Reroll Tokens

- UPD1: 1000 Gems

- Starless: 700 Gems

- christmas: 5 Reroll Tokens (Level 5+ required)

- Snowflake: 1 Reroll Token

- sorryfordelay: 1000 Gems

- Visit350K: 7 Reroll Tokens

- JoinAnimeGenesisDiscord: 2 Reroll Tokens

- Visit300K: Limited Unit

- Favorite1K: 500 Gems

- Discord10k: 2 Reroll Tokens

- Sub2LIONGAMERCH: 500 Gems and 2 Reroll Tokens

- Visit200K: 700 Gems

- Like1K: 500 Gems and 5 Reroll Tokens

- SorryForDelay0.5: 600 Gems

- UPD0.5: 500 Gems

- SorryForBug1: 5 Reroll Tokens (Level 5+ required)

- RewampUnit: 500 Gems and 1 Super Lucky Potion

- Kaweenaphat: 500 Gems

- Sub2Watchpixel: 500 Gems

- Discord9k: 3 Reroll Tokens

- Visit100K: 5 Reroll Tokens

- Release: 500 Gems

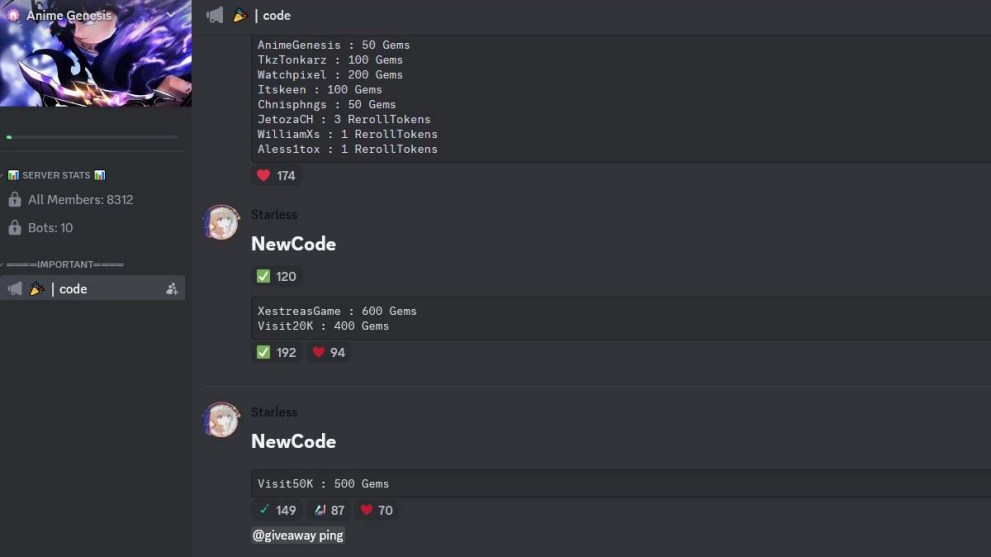

- AnimeGenesis: 50 Gems

- TkzTonkarz: 100 Gems

- Watchpixel: 200 Gems

- Itskeen: 100 Gems

- Chnisphngs: 50 Gems

- JetozaCH: 3 Reroll Tokens

- WilliamXs: 1 Reroll Token

- Aless1tox: 1 Reroll Token

- XestreasGame: 600 Gems

- Visit20K: 400 Gems

- Visit50K: 500 Gems

- Visit80K: 500 Gems

Anime Genesis Codes (Expired)

- No expired codes.

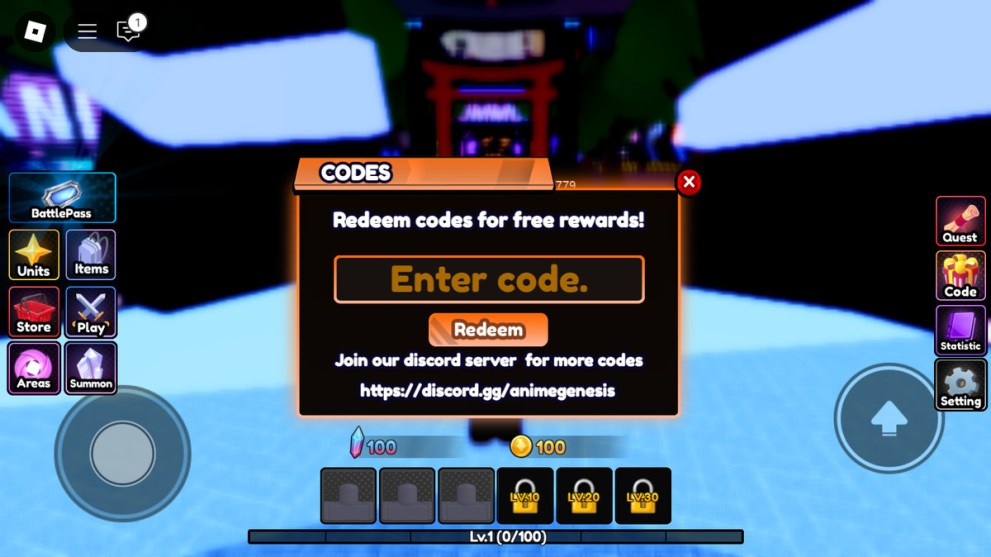

How to Redeem Codes in Anime Genesis

Redeeming codes in this game is extremely easy and requires no extra work. All you have to do is follow these steps:

- Launch Anime Genesis in your Roblox client.

- Tap the “Code” button in the right-hand menu.

- Enter the code into the orange box that appears on the screen.

- Press the “Redeem” button to confirm.

How Do You Get More Anime Genesis Codes?

Xestreas Game, the developer of Anime Genesis, regularly publishes new codes on the game’s official Discord channel. The first thing to do is join the channel and find new codes listed in the “code” tab under the “Important” section. New codes are published often, practically every day.

Also, be sure to check out the “giveaways” tab under the same section to take part in quizzes that grant extra rewards. These giveaways take place every week, and to enter you need to DM the right answers to the moderator’s questions. Lastly, check the “announcements” tab from time to time for special private server codes that are given to verified hosts.

Why Are My Codes Not Working?

Some Roblox codes will refuse to work even if you’ve followed all of the steps listed above. This can happen for a number of reasons. First, always check that the code was copied and pasted correctly, with no misplaced spaces or extra letters. Additionally, some codes have a time limit, so always check that they haven’t expired.

If you’re convinced that the code is being entered correctly and the game is still not accepting it, then it’s entirely possible that the Anime Genesis team has disabled that specific code. This happens very rarely, but when it does, the best course of action is to look for another code and try that instead. Also keep in mind that some special codes may be exclusive to private servers, so they may not work in public lobbies.

That’s everything you need to know about Anime Genesis codes. For more, check out our Strinova codes, Slayer Online codes, and Capybara Go codes. We’ve also got a Strinova tier list & best builds, King Arthur Legends Rise tier list, and Haikyuu Touch the Dream tier list.

Published: Jan 8, 2025 07:33 am