Updated: January 20, 2025

Looked for new codes!

Seemingly out of nowhere, Doors has exploded onto the Roblox scene and taken the world by storm. The first-person horror game is making waves in the Roblox community, with famous streamers broadcasting it and tons of gamers playing it right now. If you’re here, you’re probably wondering which Doors codes are currently active in Roblox so you can net some freebies. Let’s get started.

All Doors Codes Roblox (Active)

- SIX2025 – Redeem for a Revive and 70 Knobs (New)

- 5B – Claim code for a Revive and 100 Knobs (New)

- THEHUNT – Claim code for a Revive

- 4B – Claim code for a Revive

- THREE – Claim code for a Revive and Knobs

- SCREECHSUCKS – Redeem this code for 50 Knobs

Roblox Doors Codes (Expired)

- 2BILLIONVISITS – Claim this code for a Revive and 100 Knobs

- SORRYBOUTTHAT – Claim this code for 100 Knobs and 1 Revive

- ONEBILLIONVISITS – Claim this code for 100 Knobs, 1 Revive, and 1 Boost

- SORRYFORDELAY – Claim this code for 100 Knobs and 1 Revive

- PSST – Redeem this code for 50 Knobs

- LOOKBEHINDYOU – Redeem the code for 10 Knobs and one Revive

- TEST – Redeem the code for one Knob

- 500MVisits – Redeem the code for 100 Knobs and one Revive

- 100MVISITS – Redeem the code for 100 Knobs and one Revive

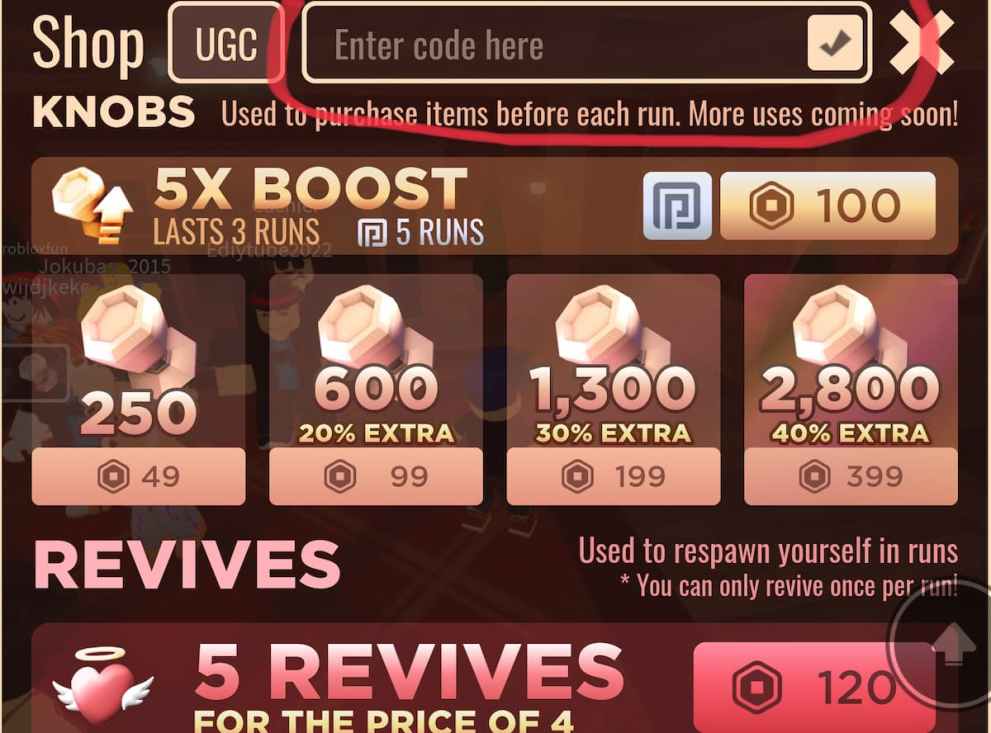

How to Redeem Codes in Roblox Doors

Fortunately, it’s quite straightforward to claim your free rewards in Roblox Doors.

- Firstly, tap on the ‘Shop’ icon on the left of the screen, as pictured below:

- Next, click on the ‘Enter code here’ field at the top of the screen and carefully type in the code that you want to redeem. Type it exactly as it appears in the list above, or the freebie won’t unlock.

All DOORS Achievements and Badges

Roblox DOORS has 42 achievements. Players can earn 41 of them through normal play, while the 42nd can only be earned if you were a QA tester. Here’s the full list:

- ???: Also known as “A-1000.” Reach the end of The Rooms.

- All Figured Out: Use a Crucifix against Figure.

- Back From the Dead: Revive yourself.

- Back On Track: Escape The Rooms.

- Betrayal: Steal a hiding spot from someone right before they die.

- Buddy System: Play a run with a friend.

- Detour: Enter The Rooms.

- Error: Encounter the Glitch.

- Eviction Notice: Get kicked out of a hiding spot by Hide.

- Evil Be Gone: Use a Crucifix successfully.

- Expert Technician: Complete the Hotel’s breaker puzzle in under a minute without any errors.

- Herb of Viridis: Find and use a green herb.

- Hundred Of Many: Encounter your hundredth death.

- I Hate You: Use a Crucifix against Screech.

- In Plain Sight: Hide from an entity by staying out of sight.

- Interconnected: Preserve an item in a Rift.

- I See You: Dodge Screech’s attack.

- It Stares Back: Encounter the Void.

- Join the Group: Join the LSPLASH group (comes with a free revive).

- Look At Me: Survive the Eyes.

- Meet Jack: Encounter Jack.

- Meet Timothy: Encounter Timothy while looting.

- One Of Many: Encounter your first death.

- Out Of My Way: Successfully survive Rush.

- Outwitted: Use a Crucifix against Dupe.

- Pls Donate: Fill Jeff’s tip jar.

- QA Tester: A hidden achievement. Earn it by testing stuff.

- Rebound: Survive Ambush.

- Rock Bottom: Escape The Hotel.

- Sshh!: Escape from the Library in the Hotel.

- Stay Out Of My Way: Use a Crucifix against Rush.

- Supporting Small Businesses: Purchase an item from Jeff.

- Take A Breather: Use a Crucifix against Seek.

- Ten Of Many: Encounter your tenth death.

- Two Steps Ahead: Use a Crucifix against Halt.

- Two Steps Forward: Survive Halt.

- Unbound: Use a Crucifix against Ambush.

- Welcome: Join for the first time.

- Welcome Back: Join another day.

- Wrong Room: Get duped by Dupe.

- You Can Run: Successfully run from Seek.

- You Can’t See Me: Use a Crucifix against the Eyes.

All the DOORS Entities in the New Update

A total of six new entities were added in the new update for DOORS: three are friendly, two are hostile, and one is neutral. Here’s a rundown of what they’re about:

El Goblino

This little fella, El Goblino, is one of the three friendly entities you can encounter in DOORS. You’ll find him hanging out at Jeff’s shop and can even talk to him.

Dupe

Dupe is a tricky entity considering it disguises itself as a door with a number, which of course you’ll want to avoid. To do that, pay close attention to the last door you passed through. If it was, say, 35, then obviously the next door is 36, in which case Dupe will appear as 37 or 38. Notice how the numbers skip? That’s Dupe trying to trick you, so keep your eyes peeled in the Hotel.
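The Dupe check above boils down to simple arithmetic: the real door is always the last number plus one. Here's a rough sketch of that rule in Python. The function name `is_real_door` is made up for illustration and has nothing to do with how the game itself is coded.

```python
def is_real_door(last_door: int, door_label: int) -> bool:
    """Return True if the label is the number that should come next.

    Illustrative helper only; not part of DOORS itself.
    """
    return door_label == last_door + 1


# After passing door 35, only the door labelled 36 is safe to open.
labels = [36, 37, 38]
safe = [n for n in labels if is_real_door(35, n)]
print(safe)
```

In other words, any door whose number skips ahead is one to leave alone.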

Jeff

Like El Goblino and Bob, Jeff is friendly. In fact, he runs a shop at Door 52 where you can buy items. If you come across his shop, be sure to grab the Crucifix, which is handy for trapping entities for a short time.

Void

Void is, well, a void; it doesn’t have a tangible form. Void is essentially neutral: neither hostile nor friendly, but it can be inadvertently helpful. If you and your friends stray too far from one another, Void will simply teleport you back to them without causing harm.

Snare

Whenever you enter The Greenhouse (and the Hotel), you’re in the domain of Snare, a little green entity that sits on the ground and traps players. The trick is to carry a light source so you can spot Snare on the ground and avoid it, unless you want to be trapped for several seconds.

Bob

A creepy old but friendly skeleton, Bob doesn’t do much of anything. With that said, if you interact with him, he’ll kill you. There isn’t an achievement for that, so don’t bother.

So, there you have it. We hope this helped to clue you in on all Doors codes in Roblox. For more on Roblox, here’s how to run in Doors, as well as a detailed list of all Adopt Me pet trade values.

Published: Jan 20, 2025 05:47 am